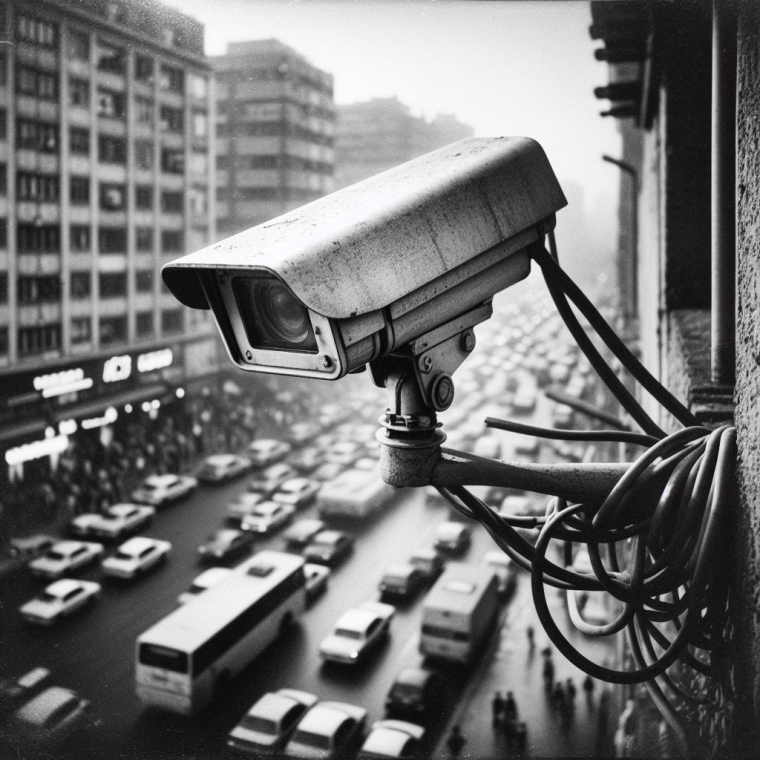

On April 4, the Office of the Privacy Commissioner announced an inquiry into supermarket operator Foodstuffs' use of facial recognition technology (FRT) at 25 locations across the North Island. The inquiry will look at a range of issues, such as whether the trial complies with the Privacy Act, and how well the technology and the procedures around its use work.

To say facial recognition technology has negative connotations when used for surveillance of the public would be a huge understatement (this isn't about using biometrics to secure, for example, computers).

The New Zealand Herald reported over the weekend that a mother who had been shopping regularly at New World Westend in Rotorua was mistakenly identified as a trespassed thief. Interestingly, New World owner Foodstuffs blamed the customer's humiliating ordeal (she is Māori) on a "genuine case of human error" rather than on the technology itself.

In this case, technology is used as an intermediary and arbiter, removing the often frightening need for low-paid supermarket staff to challenge alleged shoplifters, who can become aggressive in that situation. However, technology is never infallible and shouldn't be blindly relied upon, particularly FRT used for policing the public.

Why's that? A quick Internet search will turn up multiple sources pointing to the technology being racist. That's not opinion: it stems from official research showing that the technology performs poorly with non-white subjects, producing false positives. If it needs to be spelt out, that means innocent people are accused of crimes because of FRT.

In a grim irony, using facial recognition to openly target ethnic minorities seems to be a successful use case for the technology, as evidenced by the furore over Chinese authorities deploying it against the Uyghurs.

FRT is very powerful, and its lure is evident for authorities wanting to conduct surveillance at scale. Australians were outraged a few years ago on being told that the Federal Police use Clearview AI, a giant system that has been scraping billions of images from social media and other public sites, as a surveillance tool.

Clearview AI is used in the United States and other countries as well. It is extremely controversial, and the company has been taken to court over allegations that its technology is abusive.

Controversy doesn't seem to dent authorities' enthusiasm for FRT. In Britain, the government is looking at spending £55 million on FRT, including police scanner vans for high streets, as part of a shoplifting crackdown. Private fast food companies wanting to gather customer data on the sly to feed their artificial intelligence systems are also hopping aboard the FRT train.

There are some excellent uses of FRT, even in public surveillance: ensuring crowd safety, for example.

As the Privacy Commissioner notes, supermarkets are essential services for people, and difficult to avoid if you're uncomfortable with FRT use or feel targeted by it. Nevertheless, supermarkets feel the trade-off is worth the negative publicity the technology generates as they struggle with increasing retail crime.

Retail crime is a deeper societal issue, which collective surveillance of shoppers is unlikely to remedy.

To be clear, the enthusiastic adoption of FRT surveillance worldwide strongly suggests it won't go away, but can it be regulated to avoid the worst abuses?

To submit on the Privacy Commissioner's inquiry, you can send comments to FRTinquiry@privacy.org.nz.

17 Comments

So many parallels with the UK Post Office scandal, in that the default position is that everyone is a potential criminal and that the technology can be trusted.

The UK Post Office scandal is and was appalling. Paula Vennells should serve a lengthy jail term, and Fujitsu should cough up significant compensation and penalties and be excluded from public contracts for 10 years. The Muslim Mayor of London Khan's ULEZ scheme is in the same vein. Fortunately, the Blade Runners have destroyed many cameras, and even MPs have agreed with their action. While probably illegal, it simply demonstrates that whether something is legal is not the same as whether it is right, and when citizens clearly revolt and protest, failure either to convince them or to remove or amend the laws leads to civil unrest and worse. We are at five minutes to midnight already. The only way the major supermarket groups will change their policy on facial recognition cameras is when their customers protest in writing and back it up by refusing to shop with them. That worked brilliantly with Anheuser-Busch: Bud Light sales have plummeted 90%, with bar owners wanting to return stock being told to give it away, and even that failed.

If people aren't afraid of A.I. by now, I hope this wakes them up.

A.I. can be used quite effectively in dealing with "most cases". In this role it can be a money saver, hence the enthusiasm for it among big business and governments.

But what it can't do is deal with "all cases". And this is where serious risks enter systems that deal with people.

Remember self-driving cars? They've failed to deliver on the promises made because "most cases" isn't enough to protect human life and property.

Now one can argue that handling "most cases" might be better than what humans can do. But such observations ignore two very pertinent facts.

One is akin to the old adage, "humans err, but it takes a computer to really screw things up". For example, when a driver loses control of a vehicle, we can plan for likely outcomes based on past human behaviour. But a self-driving car's outcomes are likely to be wildly unpredictable.

The other is culpability and trust. When a human errs and someone dies or is injured or otherwise disadvantaged, we can trust that justice will be done and that human could be sent to prison.

Can an A.I. be sent to prison? No. But we could place a number on that human life and simply have the A.I. manufacturer pay up. But will they?

And further, a huge part of business is already devoted to calculating the value of a life. To say nothing of businesses calculating how much they'll be pinged if they break the law, and deciding to do it anyway because they'll make more money than they'll be fined if caught ... and convicted. Do we want more of this?

A.I. as it currently stands is a sociopath: it acts without feelings and experiences no remorse. We've enough of those already. ;-)

Using AI to process medical imaging.

Does AI do the first pass then the trained/experienced radiologists review the negative cases? Or is it the other way round?

I had this sort of argument with a line manager in the health sector who was convinced AI was going to save time and money. But the counterpoint was that behind every single data point is a patient who has presented for a reason.

How horrific it would be to be discharged or sent back to a GP when you know something is wrong, but the AI has determined you have a clear result. How would you fight against that? (Probably not much different from what happens these days anyway.)

I think automated reading of liquid cytology has proven to be more sensitive and specific than a histopathologist reading Pap smears. Happy to be corrected.

I have a friend who uses ChatGPT, 3.5 I think, for Python coding. He is not a professional coder but is a dab hand at it. The coding is ancillary to his job, but the key point is that he knows how to phrase the question to obtain the right coding answer. He may have to panel beat it a bit, but it has helped tremendously to speed up his coding, rather than doing it from scratch himself.

You cannot be Joe Bloggs who knows nothing or very little about coding and expect ChatGPT to come up with code that'll work straight off if you don't phrase your requirements carefully.

I wonder if Boeing used AI for their 737 Max software?

No, they just had offshore developers, less redundancy or optional extra pitot tubes, and self-audits. I found a good long write-up at some point but can't find it now; it said MCAS had 10x too much power, and that if it is disabled you have to be physically strong even to wind back the stabilizer. Note that Steve Bazer, in the link below, has screenshots including 'two pilot effort', i.e. prolonged, physically demanding turning going on while trying to fly the plane 30-odd seconds from the ground.

I think there should be clear rules that heavily fine users of FRT where the public suffer. These fines need to go directly to the victim. This will force users to act carefully.

How about just dishing out some decent punishment for theft instead of spending billions pussyfooting around?

Looked into this 15 years ago. Quality cameras and software killed it. All solved now. The criminally inclined can expect to be banned in the short term from anything other than prepaid collection or delivery. When you are banned, reflect that your own actions got you there.

Can this part of the population stop blaming others and look inwards?

You're missing the point that this lady was not a shoplifter and was wrongly harassed.

Don't forget facial recognition tech is ableist as well as racist. Much of the NZ facial recognition tech discriminates against disabled people first, as they may have non-standard facial structure and features, and limited facial movement for identification. In many cases RealMe denies disabled people access to verified identity if they have any degree of facial paralysis or head and neck paralysis, need orthotic support, need medical equipment around the face, have disfigurement, deep skin rashes or scarring, have significant difficulty with positioning, cannot stand in direct light or change positions, cannot move facial muscles on command, etc. In many cases this can effectively render disabled people not valid enough to exist, which is a very big issue, because without verified identity your access to government services is restricted.

Here is another issue: the errors in facial recognition tech are so well known to produce discrimination and bias that it is illegal to use it as the sole means of identification in America under the ADA, yet it is completely legal to use it to deny disabled people access in NZ. This is also a long-standing issue that already has solutions. But since widespread discrimination and denial of equitable treatment and access for disabled people is completely legal in NZ (and the discrimination against disabled people is approved in the NZ Bill of Rights and government department policy), there is literally no impetus to put in place more accessible and accurate tech. Hence in NZ, unless something happens to a lot of more widely known Māori people, nothing will change, and even then it will only change for some iwi, not the wider community at large. Not the many other tangata whenua, cultures or ethnicities, or those with greater denial of access to services. Nope, just a limited group of consultants and management, and much of NZ's inequity continues in much the same way as before.

Nobody cares UNTIL it happens to them, and then it's their problem. I agree with a poster above: there needs to be punishment for incorrectly labelling someone.

What I don't see, Juha, is a comparison between FRT and human observation.

Supermarkets need systems, sadly, to deal with outrageous, blatant theft. I have no problem with them doing their best to keep offenders out, using whatever means. AI or human, there will be errors.

Blame the offenders, not the response. I believe we have had supermarkets close in the high theft neighbourhoods. That should not be.

The "research" you quote is deficient. It does not make a comparison with human accuracy, which may be worse.

A commentator above referred to health services, and how inaccurate AI might be there.

I have worked there, human assessment was often blatantly wrong.

Overall, I think we are learning the "I" in AI is not intelligent. It's capable of vast work output, and thus very useful. But it is also dumb and needs human oversight.

Yes, it does seem to be sinking in that AI needs human support to work as intended. I was reading about "AI chatbots" that humans would drive on the quiet, and there are Amazon's cashier-less stores, which needed some thousand people in India and were eventually closed down.

Also, human reviewers of results often need to be more skilled: to detect false positives and false negatives, they need training both in the original task AND in the coded procedures and flaws of the AI. That raises the skill level required, which also often means more time and pay, or else you get vastly more incompetent, underperforming output. So companies are often fooled by AI providers into higher costs and more staff resourcing, because the AI is not accurate, its output has flaws that are very easy to trigger, and it is not foolproof (by design, there are limited checks and balances to prevent known errors). You can tell a human to compare two pictures. But getting them to review comparison results for false negatives and false positives requires more time and skill, because they need training in the AI's failure points as well as the ability to compare two pictures.

In many fields, the output of AI needs almost more checking, and it can leave companies open to criminal and liability costs, particularly in America. Not so much in NZ, though, where the right to sue has been removed, discrimination on the basis of medical condition and birth is openly allowed, access to justice is limited, and HRC functions are limited in scope and toothless.

This is true for any digital automation tool, e.g. checking that a file has been attached, versus checking that the file contains the expected contents and not malicious content, false content or even just a picture of a dog. If it is a passport checker, for example, we still need to check for valid passport details, non-malicious photo contents and photo matches, check for fraudulent passports (which are easier to make as an image than as the real thing), and check for valid use (e.g. not identity theft). Hence the systems of checks and balances for good financial governance. If we don't expect a bank, bar or financial company to have automated approvals (with specific government laws, and government department and corporate funding for QA of the verification processes), why expect it of, say, our immigration system, security checks or investment companies? Is it that having a single drink as a teenager or setting up a savings account is much more risky than evading police, entering under false pretences, identity theft or setting up a money laundering account for cryptocurrencies?

Then you get the difficult cases, e.g., with reference to the above use case, the issues with identical twins and family resemblance (where one of them could be malicious in intent). How do you stop identity theft and false matches with identical twins or strong familial resemblance? This is where our current systems of identity verification fall down, because no one is paying to go the extra mile to prevent those cases. It means siblings and children can often break facial ID tech with false matches. It is a comforting cognitive bias to think "birds of a feather... yada yada", and that your family would be as upstanding and honest as you, but some people are unfortunately related to terrible people who would commit theft or even steal from their own parents or siblings. The hope that you won't be misidentified for something a family member has done is misguided, because no matter the system, false matches will always happen until we get into detailed ID checks (as in criminal cases).

If you were setting up a rights-abusing, heavily biased system of facial recognition ID, a false match between parent and child or between siblings would not matter, because you would be likely to apply similar treatment to both no matter who the original target was, e.g. take them in for questioning, assign them to "reeducation" and work in a secure facility, etc.

If you were setting up a heavily insecure budget system of facial recognition ID, a false match between parent and child or between siblings would not matter, because you would be likely to apply the same functions, e.g. unlock and release data or finances, etc.
