By Mark A Gregory*

This week’s Optus outage affected 10 million people and hundreds of businesses. One of the early reasons given for the failure was a fault in the “core network”. The latest statement from the company points to “a network event” that caused the “cascading failure”.

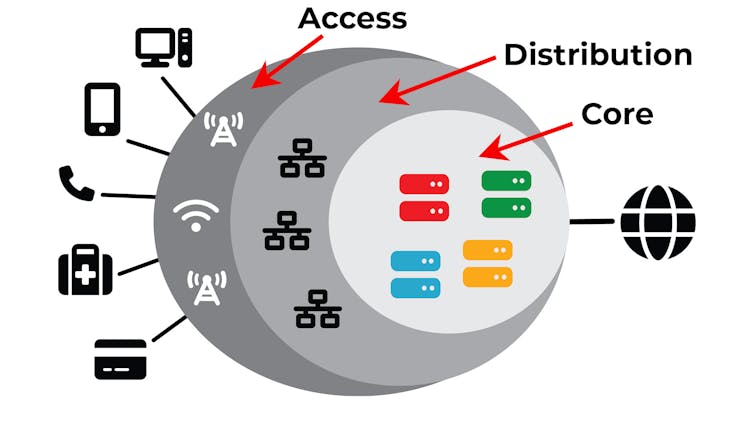

The internet is complex, so most carriers, including Optus, use the concept of the “three layer network architecture” to explain it. This abstraction splits the entire network into layers.

The access layer

This layer consists of the devices you use to connect to the internet. They include the customer equipment, National Broadband Network firewalls, routers, mobile towers, and the wall sockets you plug into.

This layer generally isn’t interconnected, meaning each device sits at the end of the network. If you want to call a friend, for example, the signal would have to travel deeper into the network before coming back out to your friend’s phone.
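The "up and back down" journey described above can be sketched with a toy hierarchy. This is a minimal illustration only: the device names and the shape of the tree are hypothetical, and real carrier networks route calls in more involved ways.

```python
# Toy hierarchy: each node names the node directly above it.
# All names here are hypothetical and exist only for illustration.
PARENT = {
    "phone_a": "tower_1",
    "phone_b": "tower_2",
    "phone_c": "tower_3",
    "tower_1": "exchange_north",
    "tower_2": "exchange_north",
    "tower_3": "exchange_south",
    "exchange_north": "core",
    "exchange_south": "core",
    "core": None,
}


def path_to_top(node):
    """Return the chain of nodes from `node` up to the top of the hierarchy."""
    chain = []
    while node is not None:
        chain.append(node)
        node = PARENT[node]
    return chain


def call_path(src, dst):
    """Climb from src to the first node shared with dst, then descend to dst."""
    up = path_to_top(src)
    down = path_to_top(dst)
    shared = next(n for n in up if n in down)
    return up[: up.index(shared) + 1] + down[: down.index(shared)][::-1]


print(call_path("phone_a", "phone_b"))
# ['phone_a', 'tower_1', 'exchange_north', 'tower_2', 'phone_b']
print(call_path("phone_a", "phone_c"))
# ['phone_a', 'tower_1', 'exchange_north', 'core', 'exchange_south', 'tower_3', 'phone_c']
```

In this sketch, two phones never talk to each other directly. The further apart they sit in the hierarchy, the deeper into the network the signal has to travel before it turns around.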

An outage in the access layer might only affect you and your local neighbourhood.

The distribution layer

This layer interconnects the access layer with the core network (more on that later). Remember that the access layer regions aren’t connected to each other directly, so the distribution layer is the interconnecting layer.

Another term for the interconnection cables is “backhaul.”

The distribution layer is a bit more abstract, but it generally includes large switches in local exchange buildings, and the cabling that joins them to each other and to the core network.

The main purpose of the distribution layer is to route data efficiently between access points. An outage in this layer could affect whole suburbs or geographic regions.

The core layer

The core layer is the most abstract. It is the central backbone of the entire network, connecting the distribution layers to each other and connecting telecommunication carrier networks with the global network.

While physically similar to the distribution layer, with switches and cables, it is much faster, contains more redundancy and is the location on the carrier’s network where device and customer management systems reside. The carrier’s operational and business systems are responsible for access, authentication, traffic management, service provision and billing.

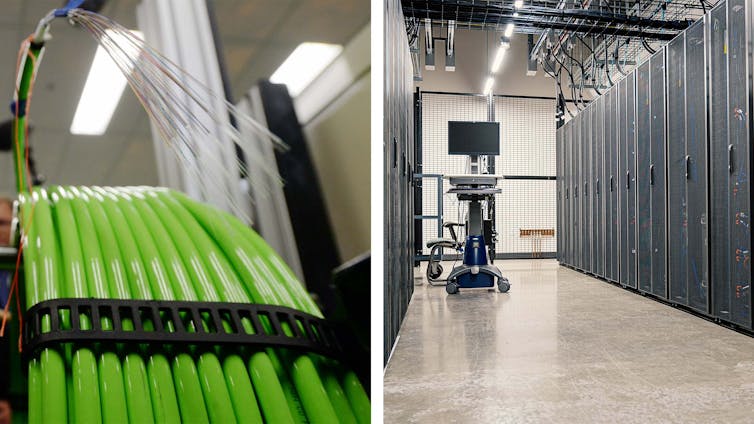

The core layer’s primary job is to carry large volumes of data at high speed. It connects data-centres, servers and the world wide web into the network using large fibre optic cables.

An outage in the core layer can affect the entire country, as occurred with the Optus outage.
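The way the reach of an outage grows from layer to layer can be sketched by counting the devices that sit below a failed node. The topology and names below are hypothetical, and the sketch assumes no redundancy at all, which real networks do have.

```python
# Hypothetical topology: each node lists the nodes directly below it.
CHILDREN = {
    "core": ["exchange_north", "exchange_south"],
    "exchange_north": ["tower_1", "tower_2"],
    "exchange_south": ["tower_3"],
    "tower_1": ["phone_a", "phone_b"],
    "tower_2": ["phone_c"],
    "tower_3": ["phone_d", "phone_e"],
}


def affected_devices(failed_node):
    """Collect every end device that sits below the failed node."""
    below = CHILDREN.get(failed_node, [])
    if not below:
        # A node with nothing below it is an end device.
        return [failed_node]
    devices = []
    for child in below:
        devices.extend(affected_devices(child))
    return devices


print(len(affected_devices("tower_1")))         # access layer fault: 2
print(len(affected_devices("exchange_north")))  # distribution layer fault: 3
print(len(affected_devices("core")))            # core layer fault: 5
```

The higher up the failure, the more devices are cut off, and a failure at the top cuts off all of them.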

Why three layers?

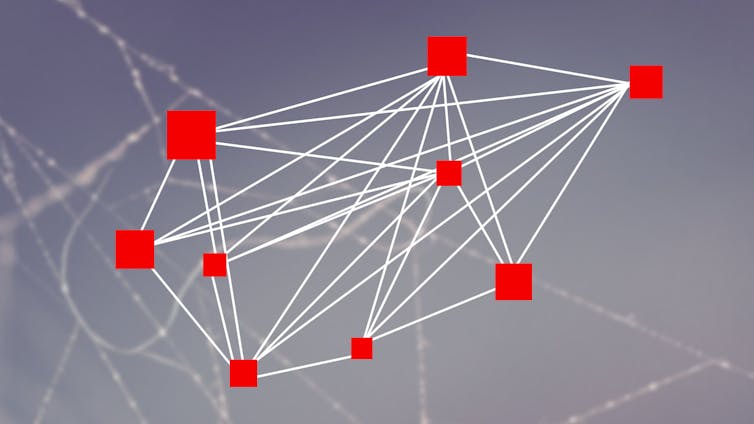

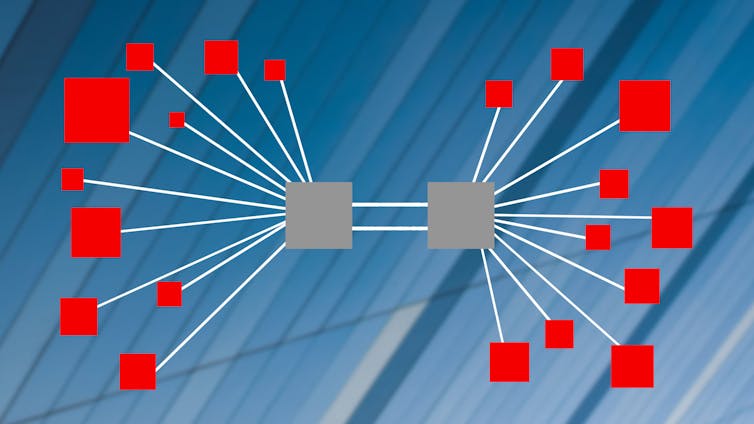

A big problem with networking is how to keep everyone connected as the network expands.

In a small network it may be possible to link everyone directly to everyone else, but as a network grows this becomes unwieldy, so the network is divided into layers based on function.
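A rough count shows why direct links stop being practical. The sketch below compares the number of links needed to connect every device to every other device with the number needed when devices are grouped under shared parents. The grouping size (`fan_out`) is an assumed parameter for illustration, not a figure from any carrier.

```python
import math


def full_mesh_links(n):
    """Links needed if each of n devices connects directly to every other."""
    return n * (n - 1) // 2


def layered_links(n, fan_out):
    """Links needed if nodes are grouped `fan_out` to a parent, level by
    level, until a single top node remains. Each node has one uplink."""
    if fan_out < 2:
        raise ValueError("fan_out must be at least 2")
    links = 0
    nodes = n
    while nodes > 1:
        links += nodes                       # one uplink per node at this level
        nodes = math.ceil(nodes / fan_out)   # number of parents one level up
    return links


print(full_mesh_links(1000))    # 499500
print(layered_links(1000, 10))  # 1110
```

For 1,000 devices, linking everyone together takes 499,500 links, while the layered arrangement in this sketch takes 1,110. The gap widens quickly as the network grows.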

The three layer model provides a functional description of a typical carrier network. In practice, networks are more complex, but we use the three layer model to help explain where equipment and systems are found in the network, e.g., mobile towers are in the access layer.

The core layer is designed to ensure that access layer traffic coming from and going to the Internet or data-centres is processed and distributed quickly and efficiently. Today many terabytes of data move through a typical carrier core network daily.

Now you can see why a core layer failure could affect so many people.

*Mark A Gregory, Associate Professor, School of Engineering, RMIT University. This article is republished from The Conversation under a Creative Commons license. Read the original article.

9 Comments

What went wrong is that the carriers aren't forced to load-share critical services when one suffers an outage. The cost of the downtime was astronomical and the government should waste no time in forcing the networks to collaborate in this regard or face massive fines and loss of the ability to operate. Payments, healthcare, transport, education... connectivity is too critical for a siloed approach.

Potential liability for manslaughter if hospital equipment goes down should be enough to get people to see sense.

I wonder whether our Visa card transaction processing here might have been affected by this outage across the ditch. I made a Visa payment on 6 November (for a vehicle registration renewal) - the current license expiry being 8 November.

When I didn't receive the email confirmation of payment - I checked my Visa statement and the payment was showing as pending up until 9 November. So, three days to process that transaction.

Seemed odd. I never check this sort of thing, except this was a sort of time critical one as I had a registration expiring two days after making the payment :-).

The CEO has said it is "highly unlikely" to be a cyber attack. They may just not understand how it could be a cyber attack. Hopefully a clear plain-English explanation is provided at some stage. The overall system is complex but each component performs simple functions, so I'll be suspicious if a jargon non-explanation is given.

The fact that there was a cascading failure is interesting.

Some big players, Google being one, have experienced massive cascading failures when the firmware/software on the servers/devices shuts down by design, i.e. the firmware/software has been programmed (or configured) how to behave in the event of a Denial of Service attack or the like.

But as volumes slowly increase, just one server/device interprets this as an attack and shuts down (or limits its throughput) to protect itself. This obviously causes more strain on other servers/devices and they do the same until everything is shut down.

These preprogrammed settings can lie dormant for many years until one day ... bang! I wonder if we'll find something similar has occurred.
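The kind of cascade described in this comment can be sketched with a toy simulation. It is an illustration of the general mechanism only, not a description of what happened at Optus or anywhere else: the thresholds, load figures and equal load sharing are all assumptions.

```python
def simulate_cascade(total_load, trip_thresholds):
    """Share the load equally among live servers. Any server whose share
    exceeds its own threshold shuts itself down. Repeat until nothing
    changes, then return how many servers are still running."""
    live = list(trip_thresholds)
    while live:
        share = total_load / len(live)
        survivors = [t for t in live if share <= t]
        if len(survivors) == len(live):
            break  # nobody tripped this round, so the system is stable
        live = survivors
    return len(live)


# Five hypothetical servers with slightly different protective thresholds.
thresholds = [100, 105, 110, 115, 120]

print(simulate_cascade(490, thresholds))  # 5: each carries 98, all stay up
print(simulate_cascade(510, thresholds))  # 0: one trips at 102, the rest follow
```

A small rise in total load, from 490 to 510 in this sketch, is enough to trip the most sensitive server. Its share then lands on the others, pushing each of them past its own limit.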

The most interesting feature of the Optus outage is that the CEO was completely absent all day attending a private function at her "mansion". To the point where Govt ministers have had to intervene and call her out.

Couple this with the huge Optus hack and personal details leakage earlier this year and she should really be gone, but nothing will happen. I wonder why?

No it isn't, it is indicative but irrelevant.

This is a complexity problem, it isn't the first (SA blackout circa 2016, for instance) and it won't be the last. In the end, it will be the unravelling of social constructs. That's not an if?, it's a when?

https://en.wikipedia.org/wiki/Societal_collapse

She couldn't have done anything. Which is exactly the problem.

It was Pilot Error. Backup connection (out of band management) into their hardware to fix the typo was...on their own network. Saves money but kinda stupid when your own network is down.

So where are the NZ networks' cores? I would hope in NZ, but I suspect overseas.

Could be many things. Most likely a change was made to NAT tables, routing, load balancing etc. that went wrong. It can be as simple as making a delete before an add by mistake. I have seen it first hand myself, with similar consequences. Often these network layer engineers are working huge hours. Sometimes you do not realise there is an issue until load appears on the network. Funny how networks MUST WORK 24/7 when governments can be missing in action for entire terms.
