This article is a re-post from Fathom Consulting's Thank Fathom it's Friday: "a sideways look at economics". It is here with permission.

by Erik Britton*

The [UK] Companies Act 2006 (Section 155(1)) states that a company must have “…at least one director who is a natural person”. I wonder whether any of Fathom’s directors fit that description. On closer examination, a ‘natural person’ in law is an individual human being. A legal ‘person’ is any entity that can be accorded legal rights and responsibilities and can enter into a contract, a category that includes companies and other bodies; being an individual human is what makes a person ‘natural’.

I suspect that all Fathom directors fit the bill, though I can’t exclude the logical possibility that they are in fact aliens doing an exceptional job of imitating humans. Indeed, the same might be true of me: how would I know? (Aliens, or Terminators: two of my favourite film franchises, incidentally.)

Less facetiously, the question arises: human as opposed to what? A dog or a cat? Some companies might be much better run if dogs or cats were in charge. But the legislation is not aimed at excluding dogs and cats. No: it is aimed at “bots”. Bots, for legal purposes, are not natural persons since they are not human.

Bots are lines of code embedded in some platform that interfaces with the world, including with other bots. Bots propose and execute deals with each other, and they have done so for a long time.

The purpose of bots in financial markets was originally to predict how humans would respond to market signals, more efficiently and with less bias than humans could, and to make money by placing trades based on those predictions. At first, the bots embedded a set of assumptions about human behaviour that humans had made. For example: if a trend in some price persists for a certain length of time, then it is likely to mean-revert (or, alternatively, it is likely to persist for even longer).
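
To make that kind of hard-coded assumption concrete, here is a minimal sketch of a rule-based signal. It is purely illustrative and not any firm's actual strategy: the function names, the `min_run` threshold and the `mode` switch are all hypothetical, chosen only to show the two competing assumptions (reversal versus persistence) side by side.

```python
from typing import Sequence


def trend_length(prices: Sequence[float]) -> int:
    """Signed length of the latest unbroken run of price moves.

    Positive for a run of rises, negative for a run of falls,
    0 if the last move was flat or there are fewer than two prices.
    """
    run = 0
    for i in range(len(prices) - 1, 0, -1):
        change = prices[i] - prices[i - 1]
        direction = (change > 0) - (change < 0)  # +1, -1 or 0
        if direction == 0:
            break  # a flat move ends the run
        if run != 0 and direction != (1 if run > 0 else -1):
            break  # the run changed direction
        run += direction
    return run


def signal(prices: Sequence[float], min_run: int = 3,
           mode: str = "mean_revert") -> int:
    """Return +1 (buy), -1 (sell) or 0 (do nothing).

    Hypothetical rule: once a trend has lasted `min_run` moves,
    either bet against it ("mean_revert") or with it ("momentum").
    """
    run = trend_length(prices)
    if abs(run) < min_run:
        return 0
    direction = 1 if run > 0 else -1
    return -direction if mode == "mean_revert" else direction


rising = [100, 101, 102, 103, 104]
print(signal(rising, mode="mean_revert"))  # bets on a reversal
print(signal(rising, mode="momentum"))     # bets on persistence
```

The same price history produces opposite trades depending on which behavioural assumption the human author chose to build in.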

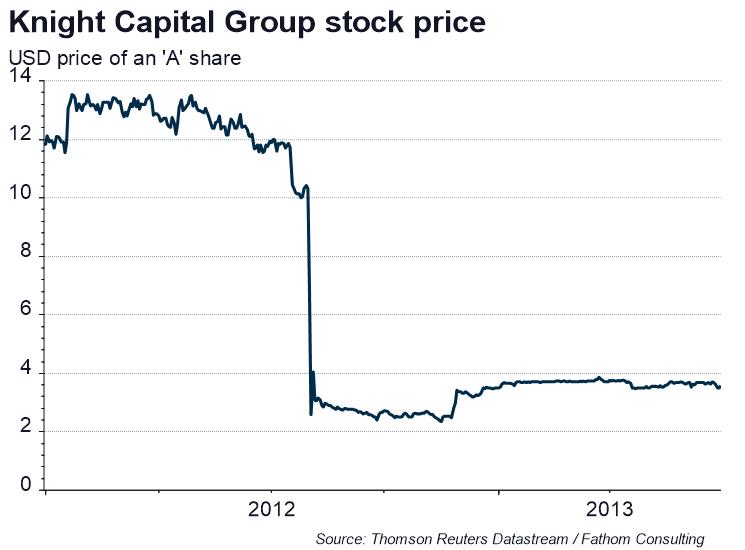

Those were like the early Terminators, clunky and easy to spot, and sometimes very easy indeed, as the ‘glitch’ in the bot at Knight Capital in 2012 illustrated. A few faulty lines of code led to catastrophically bad trades being placed for about 45 minutes, which lost $440 million and triggered the collapse of the company.

The current generation of bots is different. They are trying to predict how other bots will respond to market signals, based on a set of behavioural assumptions — a code — which they rewrite in real time without human intervention.

The most lively and interesting strand of economic thought at present is behavioural economics, which takes seriously (and not before time) the idea that humans don’t behave entirely rationally, at least not in the narrow definition of ‘rationality’ traditionally employed by economists. This strand of thought has always had traction in financial markets. A remark sometimes attributed to the greatest economist of them all, JM Keynes, runs: “Markets can stay irrational for longer than you can remain solvent”. And so-called behavioural finance is a fascinating field in its own right.

There has always been a tension between economic fundamentalists who point to how the economy and markets will evolve in the long run, driven by things like productivity, demographics, natural resources and so on; and behavioural economists or financial analysts who argue that (a) in the long run we’re all dead and (b) fortunes can be made and lost in the short run. That tension is creative: it leads to a constant stream of new hypotheses, many of which remain in vogue for a couple of years before usually proving unsatisfactory — this is the mark of a strong paradigm that is still in its growth phase.

But when we’re thinking about behavioural economics or finance now, whose behaviour do we mean? The behaviour of humans or bots? Human behavioural idiosyncrasies spring from all the things that make us human, natural persons — biases, unconscious desires, mixed motives, and the ‘general mess of imprecision of feeling’ as Eliot put it. The endlessly fascinating patterns of financial markets, not quite repeating but rhyming, so to speak, reflect those idiosyncrasies. Take them out of the picture, and what remains?

How should we assess the question of whether bots are ‘rational’? What are their intentions? Is it right to think of them as desiring anything?

As trading becomes progressively more dominated by bots, the fertile tension between economic fundamentals and idiosyncratic behavioural factors may disappear. The bots aren’t interested in fundamentals. All they care about is behaviour — of other bots.

As Kyle says of the Terminator played by Arnie in T1:

“That’s what he does! That’s all he does!”

All they do is chase their own tails, faster and faster, in ever-decreasing fractions of a second. Humans aren’t involved in the code or in the transactions, and human behavioural responses are increasingly irrelevant to how that code is drafted. We have built a process that will eat itself in the end.

The first sign of the bot-world of finance terminating itself will be ever-larger gaps between prevailing market prices and economic fundamentals. Will the two concepts converge in the long term: like the drunk and her dog, will they ‘cointegrate’? I believe they will. The key step will be humans arbitraging the gap between market prices and economic fundamentals in a predictable way. And the final step will be humans predicting how other humans will do that: back to the future (enough SF references already, Ed.).
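
For readers unfamiliar with the ‘drunk and her dog’ image of cointegration, the idea can be sketched in a few lines. The toy simulation below is an assumption-laden illustration, not a model of any real market: `fundamental` wanders as a random walk, and `price` also wanders but is tugged back towards the fundamental by a hypothetical `pull` parameter standing in for human arbitrage. All names and parameter values are invented for the sketch.

```python
import random
from typing import List


def simulate_gap(steps: int, pull: float, noise_sd: float = 1.0,
                 start_gap: float = 0.0, seed: int = 0) -> List[float]:
    """Simulate the gap between a market price and its fundamental value.

    Both series take random steps each period; the price is also pulled
    back towards the fundamental by `pull` times the previous gap.
    pull = 0 means no arbitrage, so the gap itself is a random walk.
    0 < pull < 1 means the gap keeps shrinking back towards zero.
    """
    rng = random.Random(seed)
    fundamental = 0.0
    price = start_gap
    gaps = []
    for _ in range(steps):
        gap = price - fundamental
        fundamental += rng.gauss(0.0, noise_sd)
        price += rng.gauss(0.0, noise_sd) - pull * gap
        gaps.append(price - fundamental)
    return gaps


# Compare how far the gap strays with and without arbitrage.
no_arbitrage = simulate_gap(steps=2000, pull=0.0)
with_arbitrage = simulate_gap(steps=2000, pull=0.2)
print(max(abs(g) for g in no_arbitrage))
print(max(abs(g) for g in with_arbitrage))
```

With `pull` set to zero there is nothing tying the two series together, so the gap can drift without limit; with a positive `pull` the two series still wander individually, but the gap between them is repeatedly drawn back towards zero, which is the sense in which they ‘cointegrate’.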

Along the way, we will encounter interesting legal dilemmas too. Since bots aren’t natural persons, they can’t enter into contracts with one another except as agents for natural persons, human beings. Since the bots design their own strategies and strike the deals that correspond to those strategies, a potential legal minefield has opened up relating to the meaning of contracts written between bots. Disputes about the meaning of a legal contract often invoke the intentions of those who drafted the contracts: whose intentions are relevant here, those of the natural persons who commissioned the original code (however imperfectly they are reflected in the contract) or those of the bots (if such a concept even makes sense)?

In the case of Knight Capital, the disastrous contracts into which the company entered were honoured, with painful effects for the humans involved. That was because humans had written the relevant code. What if they hadn’t? Would the contracts be honoured then?

The law is a human domain, which means humans will be held accountable for the behaviour of ‘their’ bots, by other humans. Which means, in turn, that the humans will take control again in the end. That is, if the bots let us…
